PernixData FVP 2.0 Overview

PernixData released FVP 2.0 on October 1st, 2014, adding a number of significant new features to the already award-winning lineup. If you are new to PernixData, FVP is a scale-out VM acceleration solution which accelerates both reads and writes using server-side acceleration resources to increase IOPS and to reduce latency. A key feature that makes FVP unique is the ability to accelerate write operations without pinning virtual machines to a single host, and doing so in such a way that writes are protected in case of media or host failure. I will cover the basics of the new features here, but you can learn more about FVP on the PernixData site.

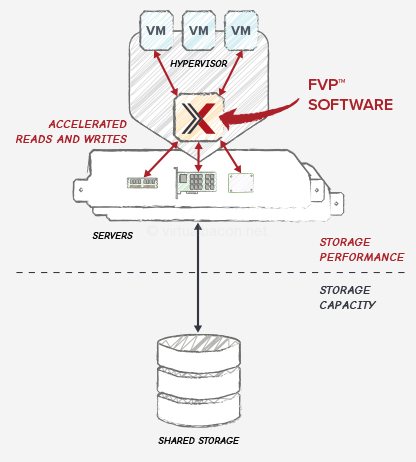

New features in FVP 2.0

NFS support

Adding to pre-existing support of block protocols (iSCSI, FC, FCoE, DAS), FVP 2.0 adds support for NFS, rounding out support of all VMware-supported storage protocols. This means that FVP is now a single tool that can be used in any VMware environment.

Distributed Fault Tolerant Memory (DFTM)

While acceleration previously required flash media such as SSD, PCIe flash, or NVMe (public ESX drivers should be out soon), you can now allocate a portion of host RAM to accelerate both reads and writes. vSphere 5.5 U2 supports 6 TB of RAM per host, and FVP now allows you to allocate up to 1 TB per host to an FVP cluster. With VMware support for 32 nodes in a cluster, that means you can allocate up to 32 TB of RAM per cluster for acceleration. As with flash, writes are protected by synchronously replicating them to one or more peers within the cluster.
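To make the sizing arithmetic concrete, here is a small Python sketch. It only multiplies the limits quoted above (1 TB per host, 32 hosts per cluster); the function name `cluster_ram_tb` is made up for illustration and is not part of any PernixData tooling.

```python
# Illustrative arithmetic only; the limits are the figures quoted in the text.
MAX_RAM_PER_HOST_TB = 1      # per-host RAM that can be allocated to FVP
MAX_HOSTS_PER_CLUSTER = 32   # vSphere cluster size cited in the text


def cluster_ram_tb(hosts, ram_per_host_tb):
    """Total RAM contributed to an FVP cluster, in TB (hypothetical helper)."""
    if not 0 < hosts <= MAX_HOSTS_PER_CLUSTER:
        raise ValueError("host count outside the supported range")
    if not 0 < ram_per_host_tb <= MAX_RAM_PER_HOST_TB:
        raise ValueError("per-host RAM outside the supported range")
    return hosts * ram_per_host_tb


print(cluster_ram_tb(32, 1))    # the maximum case described above
print(cluster_ram_tb(8, 0.5))   # a smaller cluster with 512 GB per host
```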

User-Defined Fault Domains

By default, FVP automatically decides where to protect writes within the cluster. That is still the default, but FVP now lets the VMware administrator create fault domains and map groups of ESXi hosts to them: hosts in different racks, in different blade chassis, on different power, or even in different data centers in the case of stretched clusters. Conversely, the feature can be used to keep writes in the same data center if latency between geographic sites is too high. This gives the administrator very granular control over how and where writes are protected.
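The idea can be sketched in a few lines of Python. This is not how FVP is implemented or configured; the host names, the rack labels, and the `pick_peers` function are all assumptions made up to illustrate choosing replica peers either outside or inside the source host's fault domain.

```python
from typing import Dict, List


def pick_peers(source: str, domains: Dict[str, str], replicas: int,
               same_domain: bool = False) -> List[str]:
    """Pick peer hosts for write replicas relative to the source's fault domain.

    Hypothetical helper: same_domain=False picks peers in other domains,
    same_domain=True keeps replicas in the source's own domain.
    """
    src_domain = domains[source]
    candidates = sorted(
        host for host, domain in domains.items()
        if host != source and (domain == src_domain) == same_domain
    )
    if len(candidates) < replicas:
        raise ValueError("not enough eligible peers")
    return candidates[:replicas]


# Hypothetical mapping of ESXi hosts to fault domains (one per rack).
hosts = {"esx01": "rack-a", "esx02": "rack-a", "esx03": "rack-b", "esx04": "rack-b"}

print(pick_peers("esx01", hosts, replicas=1))                    # ['esx03']
print(pick_peers("esx01", hosts, replicas=1, same_domain=True))  # ['esx02']
```

The first call protects the write in another rack; the second mirrors the stretched-cluster case where you would rather keep the replica close to the source.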

Adaptive Network Compression

FVP works on gigabit or better networks, the same as vMotion. While write back performance will obviously be better on faster networks (yes, 10 Gb is faster than 1 Gb), many current and future customers still have gigabit networking. Gigabit can still be used to deliver great improvements, but the improvement will cap out sooner than it would on faster networks. Adaptive network compression automatically detects when the network used for FVP is running at 1 Gb and compresses data to be protected in order to maximize throughput and to lower latency, using only 1 to 2% CPU. Pete Koehler, a PernixData customer, shows graphs of the effect of adaptive network compression from his own production environment on his blog.
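As a rough mental model, the decision looks something like the Python sketch below. FVP's actual detection logic and compression algorithm are not described here, so treat everything as an assumption: `zlib` is only a stand-in compressor, and `prepare_for_replication` and the 1000 Mbps threshold are names and values invented for illustration.

```python
import zlib
from typing import Tuple

GIGABIT_MBPS = 1000  # assumed threshold, taken from the "1 Gb" figure in the text


def prepare_for_replication(payload: bytes, link_speed_mbps: int) -> Tuple[bool, bytes]:
    """Return (was_compressed, data) for a write about to be sent to a peer.

    Hypothetical helper: compress only when the link is gigabit or slower,
    where saving bytes on the wire matters more than the CPU spent.
    """
    if link_speed_mbps <= GIGABIT_MBPS:
        # Level 1 trades compression ratio for speed.
        return True, zlib.compress(payload, 1)
    return False, payload


block = b"A" * 4096
compressed, data = prepare_for_replication(block, link_speed_mbps=1000)
print(compressed, len(data) < len(block))

compressed, data = prepare_for_replication(block, link_speed_mbps=10000)
print(compressed, data == block)
```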

Other tidbits

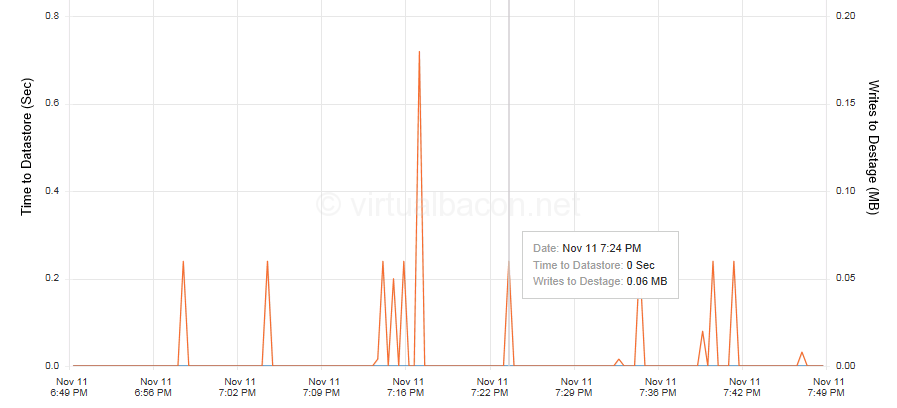

Time to Datastore

An overlooked but awesome feature is the new Write Back Destaging graph. FVP can handle seamless transitions to flush out the write buffer for data services such as snapshots and replication, but an admin might want to understand how data flows through the buffer at other times. The new graph does just that by displaying how long it takes for data to arrive on the backend storage. In most environments this will be anywhere from milliseconds to seconds, occasionally higher under prolonged write-intensive load (such as when I run write-heavy IOmeter tests in my home lab backed by two spindles). This gives the admin even greater insight into how data is flowing under the covers at the VM, host, and cluster level (like all of our other graphs).
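If it helps to think of the metric in concrete terms, the Python sketch below computes a "time to datastore" value from pairs of timestamps. It is only an illustration of the concept: the sample data is invented, and `time_to_datastore` is a hypothetical function, not something FVP exposes.

```python
def time_to_datastore(samples):
    """Seconds between a write being acknowledged and landing on backend storage.

    Hypothetical helper: each sample is (acknowledged_at, destaged_at) in seconds.
    """
    return [destaged - acked for acked, destaged in samples]


# Invented samples: two quick destages and one slow one under heavy write load.
samples = [(0.0, 0.004), (1.0, 1.25), (2.0, 5.0)]
delays = time_to_datastore(samples)

print(max(delays))  # the worst case is what stands out on a graph like this
```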

8 hour view

That's right - the 8 hour view. While FVP previously had presets for 10 minutes, 1 hour, 1 day, 1 week, 1 month, and a custom time range, 1 hour was often too short and 1 day was often too long. Having to specify a custom time range did the trick but took a little more time. Many admins will find the 8 hour view to be just right for much of the business day, covering a period which includes the beginning of the business hours to the current time. This small addition makes a lot of people happy.

Simple upgrade

PernixData customers running FVP 1.5 will be pleased to know that the management server component can be upgraded in place within a few minutes. Some customers have jokingly complained about how painless the process is, asking for more clicks and dialog boxes. 🙂 As before, the host extensions can be installed by using VMware Update Manager to create a new patch and simply remediating the hosts, as with any other VMware patch. This is possible because PernixData FVP is certified through VMware's PVSP program. For those who prefer the SSH shell, the extension can also be installed with a single esxcli command. The upgrade process is simple and is described in the upgrade guide.

Unchanged

- As with previous versions, FVP 2.0 supports vSphere 5.0 and above.

- FVP can be managed both through the web client and through the vSphere client. The plugin extensions are automatically registered with vCenter upon installation.

- Acceleration of both reads and writes (with fault tolerance) is done within the kernel space. This provides the shortest IO path possible.

- Acceleration is done in place. Your virtual machines stay on the datastore where they live today. No changes are required.

- FVP works with any hardware on the VMware HCL.

- Snapshots, DRS (in fully automated mode), HA, linked clones, vSphere Replication, etc. all continue to work as they do today.

- Because FVP accelerates both reads and writes within the kernel space, while providing fault tolerance, pretty much any enterprise application can benefit from PernixData FVP. It does not matter whether you are looking to improve performance for enterprise databases such as Oracle, SQL Server, or MySQL, for SharePoint, Domain Controllers, ERP applications, or web servers, or for nearly any other application that runs on vSphere.

- Performance is decoupled from capacity. VM and application performance is delivered primarily from the front end, with the backend storage doing what it does best, which is to deliver capacity and provide data services.

Disclaimer: I am a former PernixData customer who liked the product so much that I joined the company. The great thing about FVP being software is that you don't have to take my word for it. Try it out for yourself in your own environment, for free, for 30 days.