Blade Servers: Boot options for leveraging server-side flash

When planning an install or re-install of ESXi, there are a number of boot options available. In this post I will focus on blade servers. That form factor is convenient in many ways, but it offers only a small number of drive bays. If you want to leverage server-side flash for acceleration, keeping those bays free is important. This post is not a step-by-step guide to installing ESXi on the various boot media. There are plenty of other blog posts that describe the process, as well as instructions on the VMware web site (SD/thumb drive and install guide).

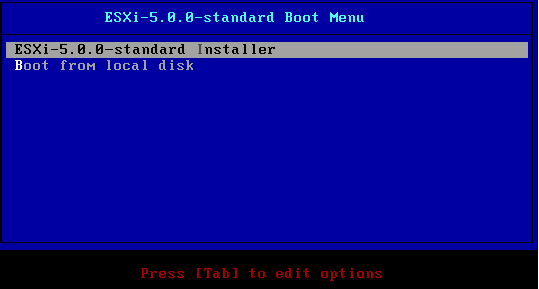

ESXi boot options:

- Boot from local disk

- Boot from SAN

- Boot from SD card/thumb drive

- Boot from network (Auto-deploy)

Boot from local disk

For blade servers I don't usually recommend booting from local disk. It is a perfectly valid option, of course, but the number of drive bays is limited, so why spend one or both of them on ESXi? Many people don't know, or forget, that once ESXi is loaded it runs in memory. After boot, the boot disk is needed only for saving configuration changes and for logging. Logging is easily handled by configuring a remote scratch location (also described at the link above) and/or remote syslog.
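As a rough sketch of the remote syslog and scratch settings mentioned above, the Python helper below only builds ESXi shell commands as strings. It does not connect to a host. The helper name, the `.locker-<hostname>` directory convention, and the example host and datastore names are assumptions for illustration. Check the commands against the VMware documentation for your ESXi version before you run them in the ESXi shell.

```python
import shlex


def remote_logging_commands(syslog_host: str, datastore: str, hostname: str,
                            port: int = 514, protocol: str = "udp") -> list[str]:
    """Build ESXi shell commands for remote syslog and a persistent scratch location.

    Hypothetical helper: syslog_host, datastore and hostname are placeholders
    for values from your own environment.
    """
    if protocol not in ("udp", "tcp", "ssl"):
        raise ValueError(f"unsupported syslog protocol: {protocol}")
    loghost = f"{protocol}://{syslog_host}:{port}"
    # Assumed convention: one scratch directory per host on a shared datastore.
    scratch = f"/vmfs/volumes/{datastore}/.locker-{hostname}"
    return [
        # Send logs to the central syslog server, then reload syslog to apply it.
        f"esxcli system syslog config set --loghost={shlex.quote(loghost)}",
        "esxcli system syslog reload",
        # Allow outbound syslog traffic through the ESXi firewall.
        "esxcli network firewall ruleset set --ruleset-id=syslog --enabled=true",
        # Create the scratch directory on persistent storage.
        f"mkdir -p {shlex.quote(scratch)}",
        # Point ScratchConfig at it; the new location takes effect after a reboot.
        "vim-cmd hostsvc/advopt/update ScratchConfig.ConfiguredScratchLocation "
        f"string {shlex.quote(scratch)}",
    ]


if __name__ == "__main__":
    for cmd in remote_logging_commands("syslog.example.com", "shared-ds01", "esx01"):
        print(cmd)
```

Quoting each value with `shlex.quote` keeps datastore names that contain spaces intact when the commands are pasted into the shell.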

Boot from SAN

Boot from SAN can be a practical deployment method. The hypervisor does not occupy any of the drive bays in the blade. When you combine this with address virtualization in the chassis (WWN or IQN), the blade becomes an easily replaceable device. If a blade fails, simply pull it out and insert another one. The replacement will boot from the SAN just as the previous one did.

Boot from SD/Thumb drive

In my own deployment I chose to boot from SD card. This removed the need to create and present additional LUNs to the blade environment, and installing and upgrading ESXi is quick and easy. When booting this way, don't forget to configure remote logging. Otherwise the scratch space will be on a ramdisk, which will eventually fill up and does not persist across reboots. You never want to be without logs when you need to troubleshoot later.

Boot from Network

Booting from the network using Auto Deploy is another way to avoid using local drive bays. In this case the blade is completely stateless and receives a fresh installation every time it reboots.

Why do I bring this up?

When I talk to people about server-side flash acceleration on blade servers, I occasionally meet folks who have ESXi installed on two drives in a RAID 1 mirror. They are sometimes hesitant to break the mirror to free up a disk slot, or to boot using one of the other methods listed above, because they believe the mirror gives the host additional protection. That may be true for many other operating systems, but remember again that ESXi runs in memory. Even if the boot device fails while the hypervisor is running, ESXi will keep operating normally. You could fill up the ramdisk and lose current logs, but remote logging easily mitigates that. Centralized remote logging is a good idea anyway.
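The following hypothetical bay-accounting sketch puts numbers on the trade-off. The per-option bay counts are assumptions: a local install is modeled as either one disk or a two-disk RAID 1 mirror, and SAN, SD card and Auto Deploy boots are modeled as using no drive bays in the blade.

```python
# Assumed number of blade drive bays each boot option consumes.
BAYS_USED_FOR_BOOT = {
    "local_disk": 1,
    "local_disk_raid1": 2,
    "san": 0,
    "sd_card": 0,
    "auto_deploy": 0,
}


def bays_free_for_flash(total_bays: int, boot_option: str) -> int:
    """Return how many drive bays remain for server-side flash after booting ESXi."""
    used = BAYS_USED_FOR_BOOT[boot_option]  # KeyError for an unknown option
    if used > total_bays:
        raise ValueError(f"{boot_option} needs {used} bays but only {total_bays} exist")
    return total_bays - used


if __name__ == "__main__":
    for option in BAYS_USED_FOR_BOOT:
        print(option, bays_free_for_flash(2, option))
```

On a two-bay blade, the RAID 1 mirror leaves no bay for flash, while booting from SAN, SD card or the network leaves both bays free.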

One non-production environment I worked with had a host that had been running with a failed SD card for some time. We had not yet made it to the remote datacenter to replace the card, and the host and all of its VMs continued to run just fine. When we were ready to replace the failed boot device, we simply put the host into maintenance mode and migrated the VMs to other hosts, so the work could be done without disruption.

Summary:

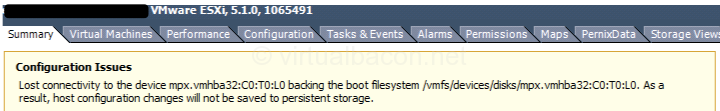

If you are running blade servers, or even rack mount servers where you need another one or two drive bays, consider a boot option other than local disk to free up slots for other uses, such as server-side flash. For ESXi, a local mirror doesn't offer much extra protection, because the hypervisor runs in memory once the server has booted. A failed boot device will raise an alert in the vSphere client, and in your monitoring tool depending on your configuration, and you can then take the appropriate action for your environment. Don't forget to configure a persistent scratch location to retain logs across reboots.